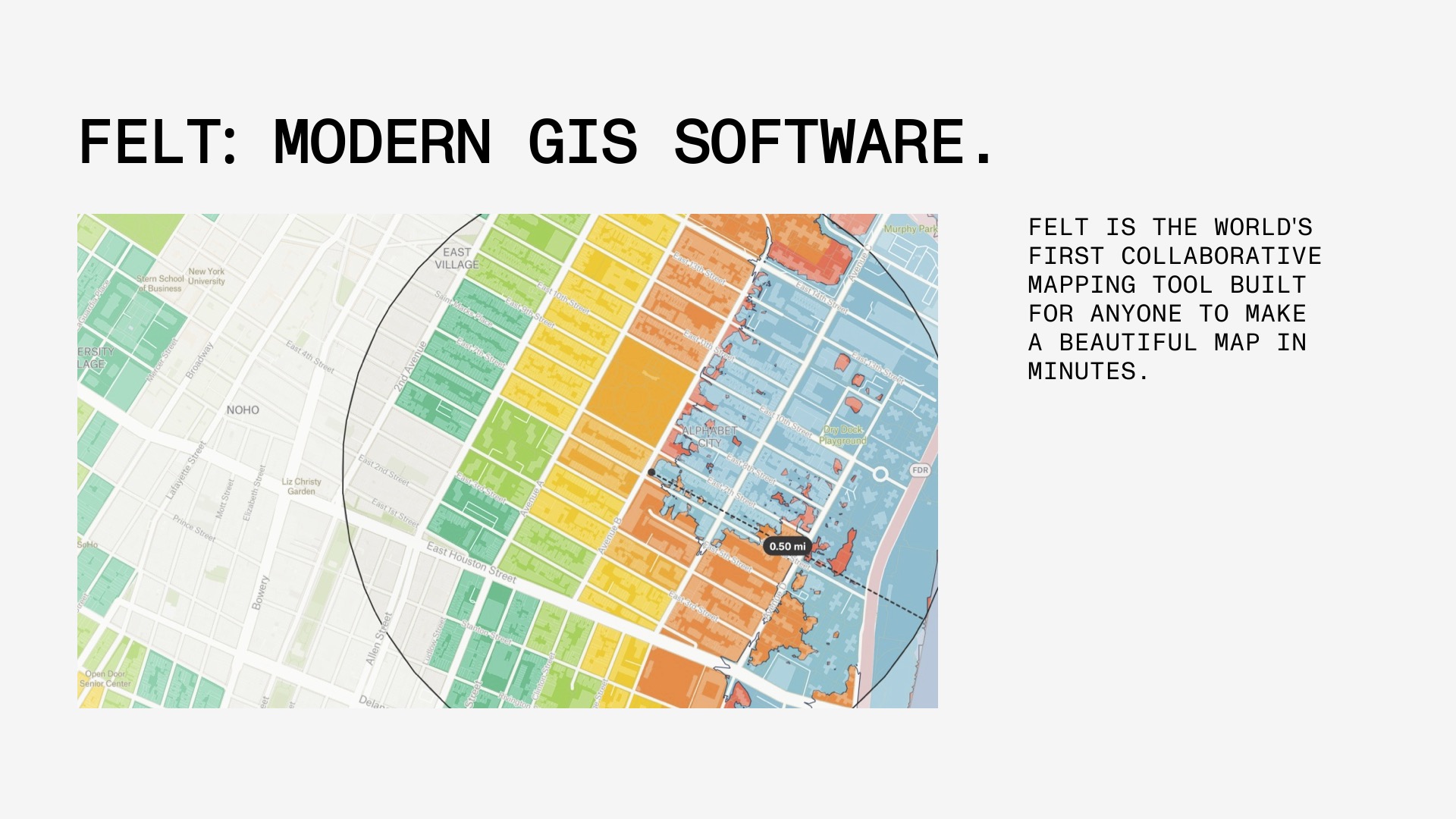

Felt is a modern GIS (Geographic Information System) company that helps businesses visualize their geospatial data and make beautiful maps. I joined as a product consultant in 2025 to help them solve their main growth challenge at the time, a low paid conversion rate. Together, we worked to understand the different customer segments, diagnose the low conversion rate, and build a sound growth roadmap.

When I joined Felt, they were having a lot of success but struggling with one metric: paid conversion rate. They had recently updated their entire onboarding flow and were flummoxed that the release had no impact on either their activation rate or their paid conversion rate. So they brought me in to diagnose the low paid conversion rate and figure out how to improve it.

First, some background. Felt first launched as an easy-to-use cloud mapping solution for the masses – much in the mold of what Figma had done in the design industry. Shortly before I joined, they shifted their focus from a broad consumer audience to GIS professionals and set a goal of unseating the dominant incumbent, ESRI. With this new target customer in mind, Felt launched two high-priced plans (team, enterprise) to monetize the professional audience. It worked – revenue started to take off. However, paid conversion rate remained low and appeared to be a drag on growth.

After discussions with the team, it became clear that the new paid plans were a fit for only a small segment of their existing audience. However, the team didn’t have a clear understanding of who the plans were a fit for or what percentage of their existing audience was in that segment. To resolve these knowledge gaps, we set out to gather more information about users during onboarding.

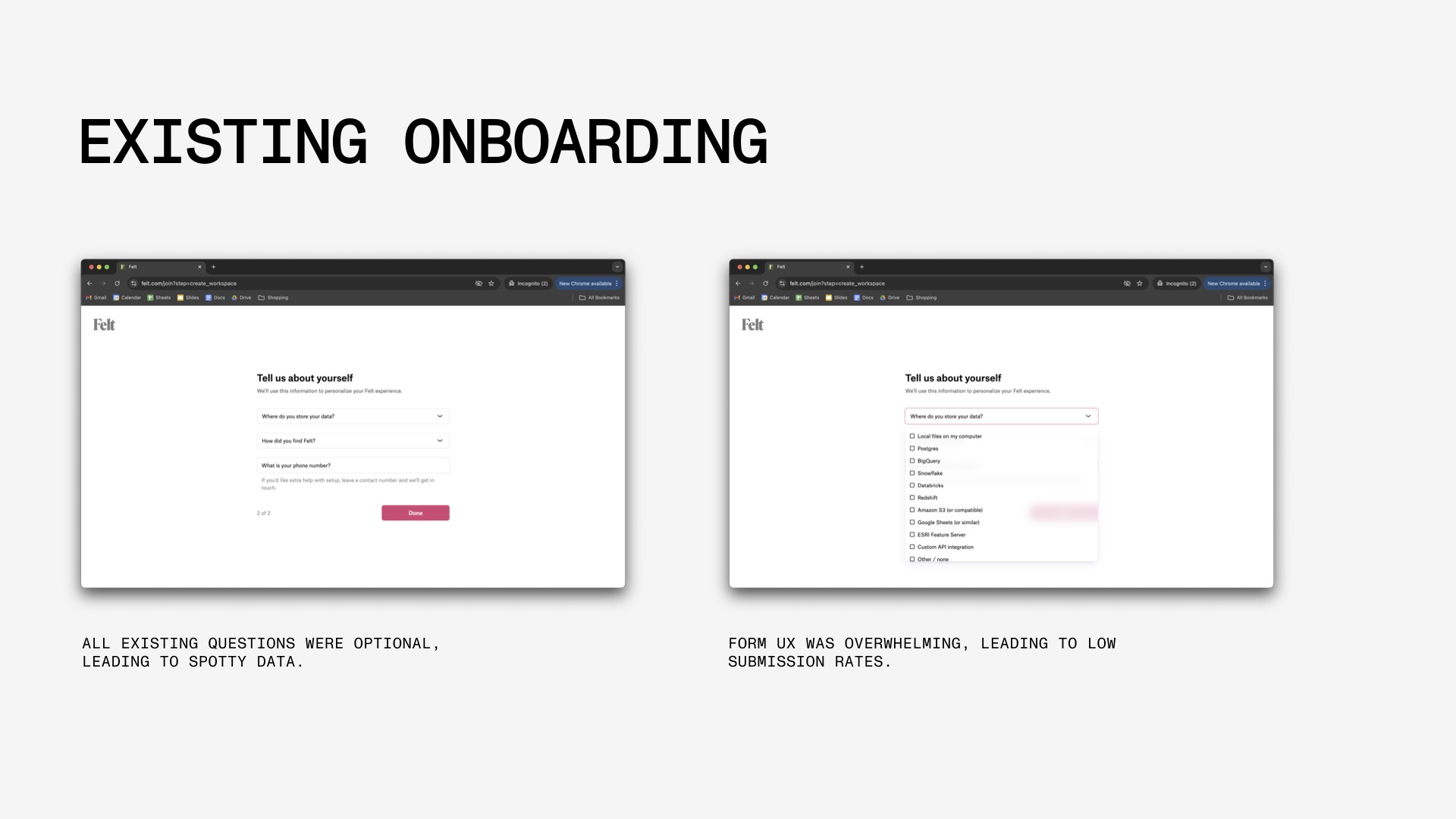

The existing onboarding questions had three major issues. First, the questions didn’t sufficiently differentiate between qualified and unqualified signups. Second, the questions were optional, leading to spotty data. And third, the UX was overwhelming, which led to low submission rates.

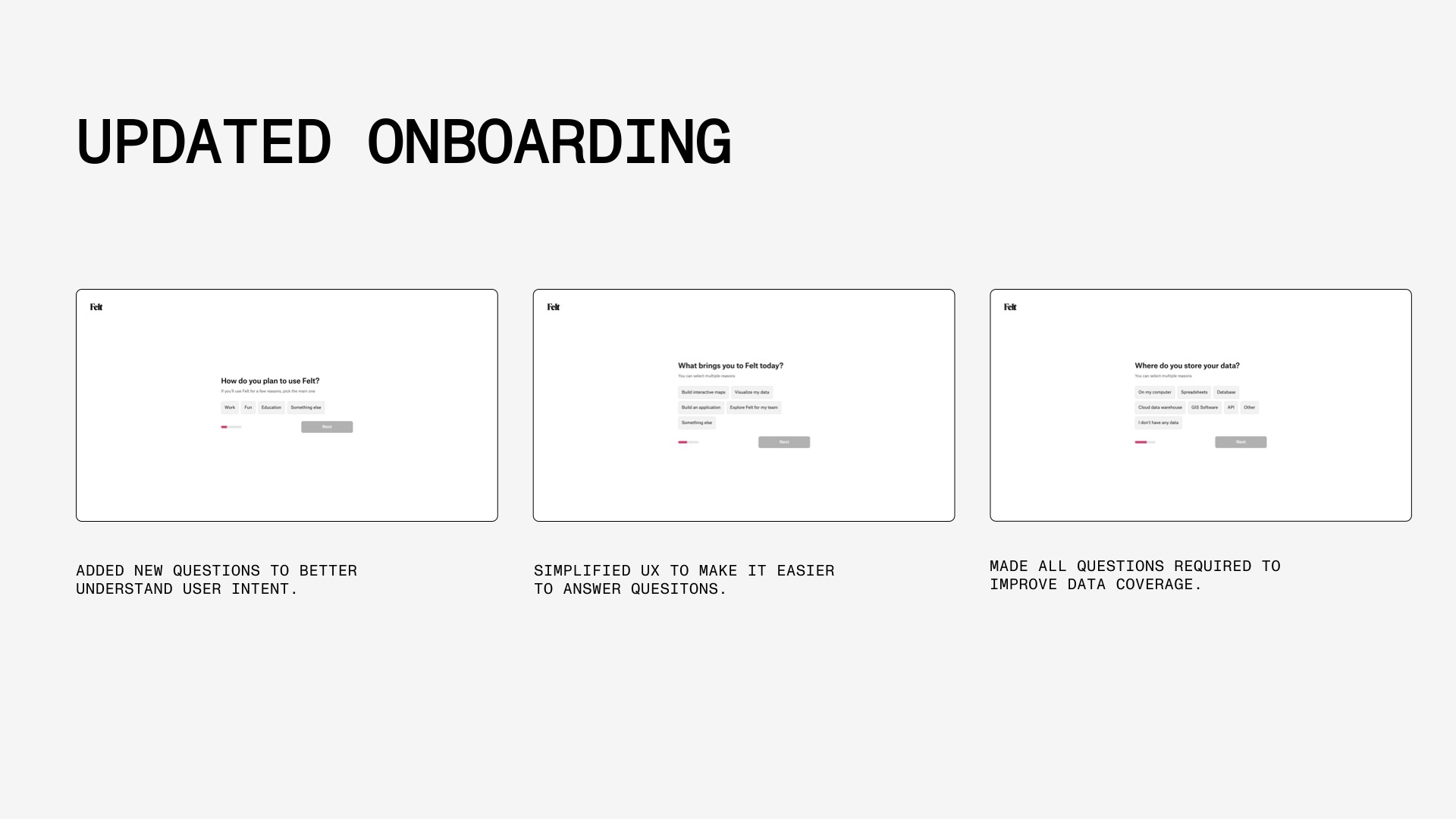

To resolve these issues, we added two new questions to better qualify users, replaced the difficult UX with engaging interactions, and made all of the questions required. The result was 100% data coverage for new users and a significantly improved understanding of user intent.

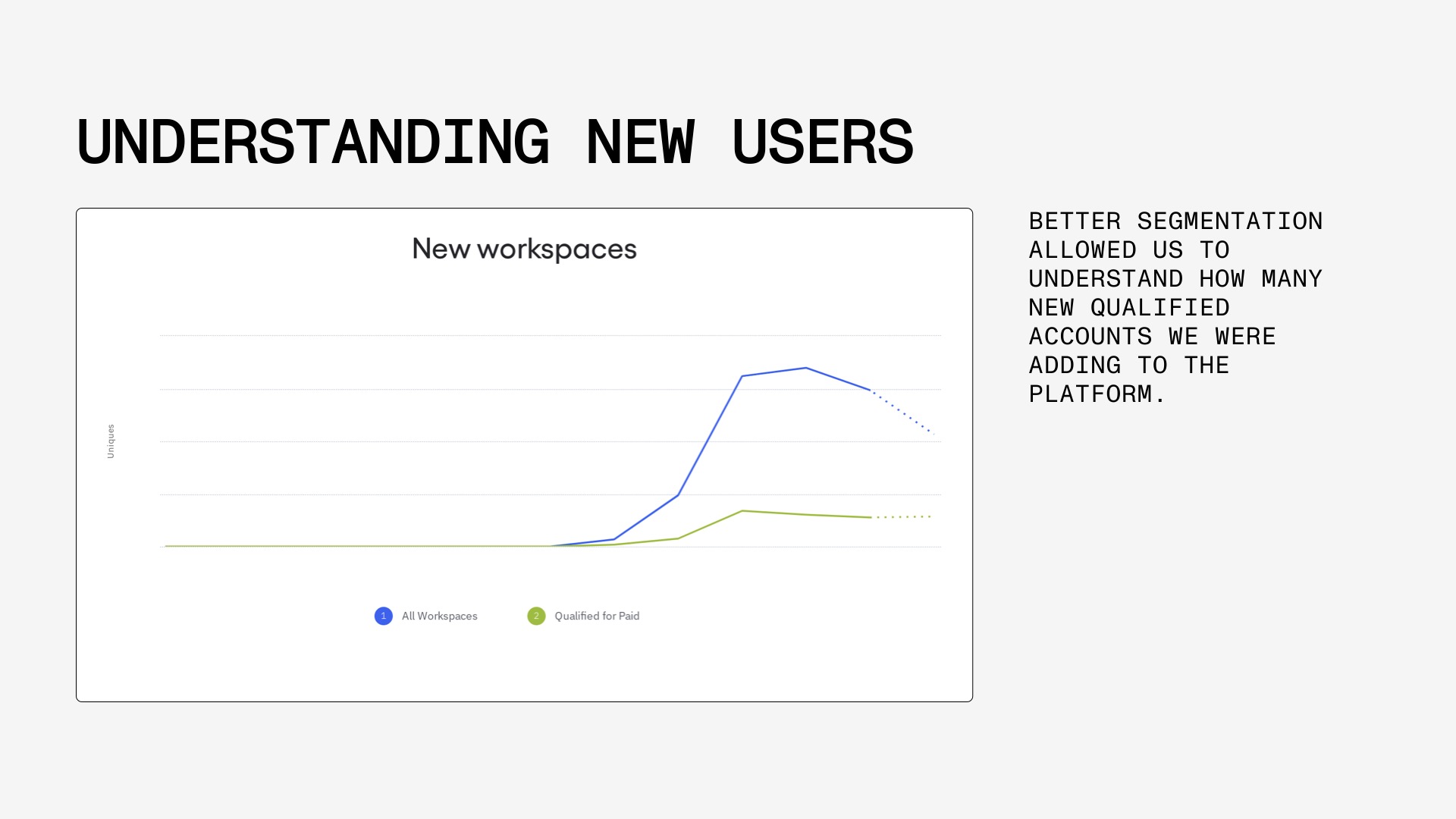

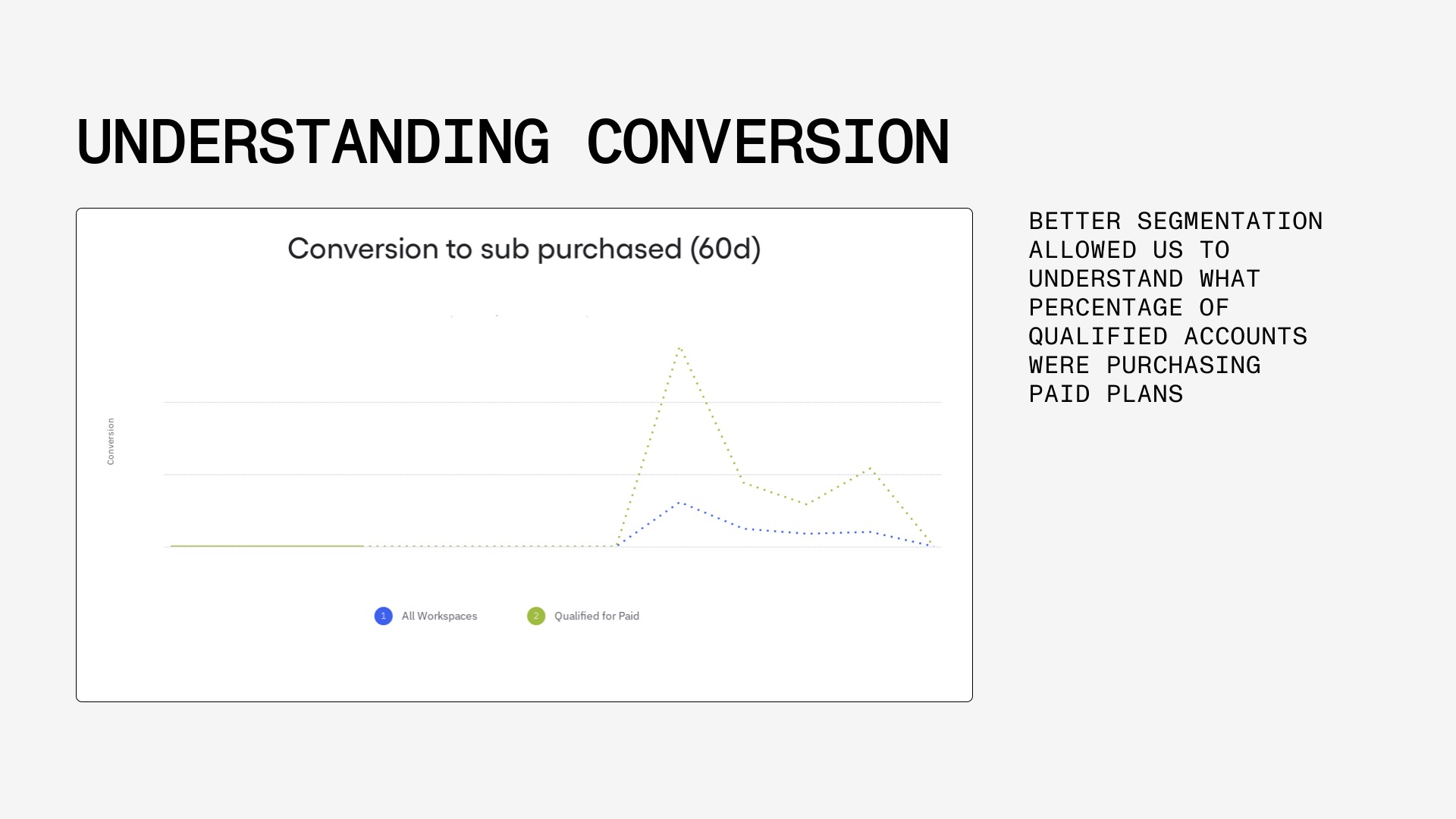

With this new data, we were able to quantify two important numbers:

1. How many new qualified users were signing up each week.

2. What percentage of qualified users purchase a paid plan.
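As a rough illustration of how these two numbers can be computed once every signup carries a qualification answer, here is a minimal sketch. The records, field order, and the `weekly_qualified_metrics` helper are all hypothetical and are not Felt's data or pipeline.

```python
from collections import defaultdict
from datetime import date

# Hypothetical signup records: (signup_date, is_qualified, purchased_paid_plan).
# These values are made up purely for illustration.
signups = [
    (date(2025, 3, 3), True, True),
    (date(2025, 3, 4), False, False),
    (date(2025, 3, 5), True, False),
    (date(2025, 3, 10), True, False),
    (date(2025, 3, 11), False, False),
    (date(2025, 3, 12), True, True),
    (date(2025, 3, 13), True, False),
    (date(2025, 3, 14), False, False),
]


def weekly_qualified_metrics(records):
    """Group signups by ISO week and compute qualified volume and conversion."""
    weeks = defaultdict(lambda: {"signups": 0, "qualified": 0, "paid": 0})
    for signup_date, is_qualified, purchased in records:
        iso = signup_date.isocalendar()
        week = weeks[(iso[0], iso[1])]  # key is (ISO year, ISO week number)
        week["signups"] += 1
        if is_qualified:
            week["qualified"] += 1
            if purchased:
                # Only purchases by qualified users count toward this metric.
                week["paid"] += 1
    for week in weeks.values():
        qualified = week["qualified"]
        week["qualified_conversion"] = week["paid"] / qualified if qualified else None
    return dict(weeks)


metrics = weekly_qualified_metrics(signups)
for key in sorted(metrics):
    print(key, metrics[key])
```

Restricting the denominator to qualified signups is the design choice that matters here: unqualified signups no longer dilute the rate.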

While I can’t share specific data, the new analyses showed that a minority of new signups were qualified for their paid plans. Those who were qualified purchased at a consistent rate that was at the low end of the healthy range. Importantly, this higher baseline conversion provided a more sensitive metric for measuring changes to the product and acquisition strategy. It gave them a metric to aim for that they could actually impact.

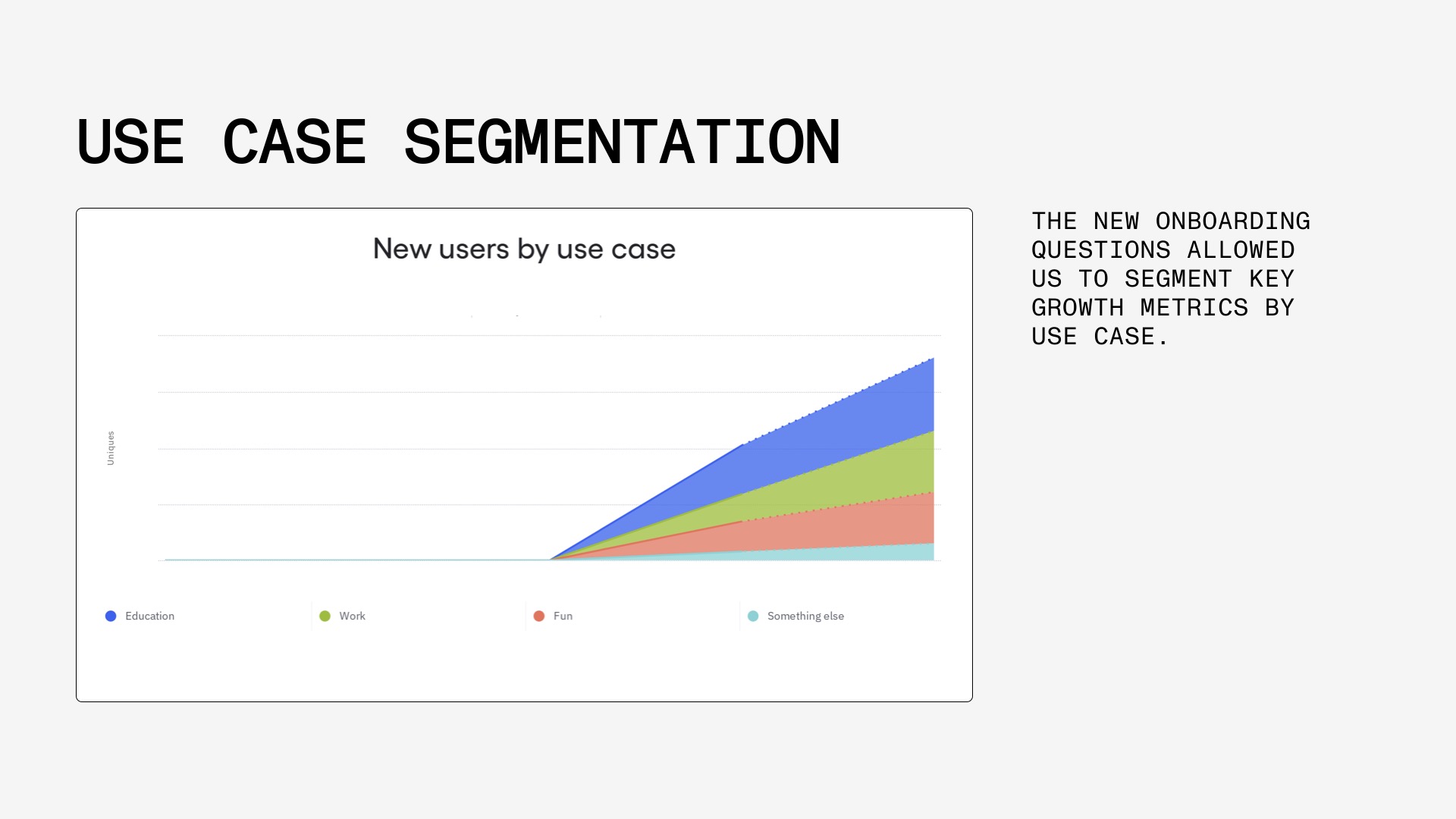

In addition to better understanding how qualified their users were, the new data unlocked new segmentations by use case and other important attributes. These analyses will help Felt reach their conversion goals in the short term and build better solutions for these audiences going forward.

All of this new data helped Felt unlock a deeper understanding of their users and move forward with their conversion roadmap, now equipped with more sensitive measurement. The analyses also opened up two new areas of growth: growing qualified signups and better monetizing other use cases (education, for fun).

Another challenge the Felt team faced before I joined was running successful A/B tests. In the past, they had failed to get a significant result because they were using an impossible conversion metric. Their historic experiments had used paid conversion rate for all users as their target metric. This didn’t work because paid conversion is a very hard metric to move, and conversion was so low that the experiments needed a boatload of data to get a result.
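To make the "boatload of data" point concrete, here is a minimal sketch of the standard sample size approximation for comparing two proportions. The baseline rates and the 10% relative lift are illustrative assumptions, not Felt's numbers, and `sample_size_per_arm` is a hypothetical helper.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(baseline, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed per arm to detect a relative lift
    in a conversion rate with a two-sided two-proportion test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Assumed baselines: a very low rate versus a high one, same 10% relative lift.
low_baseline_n = sample_size_per_arm(baseline=0.01, relative_lift=0.10)
high_baseline_n = sample_size_per_arm(baseline=0.60, relative_lift=0.10)

print(f"1% baseline:  {low_baseline_n:,} users per arm")
print(f"60% baseline: {high_baseline_n:,} users per arm")
```

Under these assumptions the low-baseline metric needs far more users per arm than the high-baseline one, which is why a rare event like paid conversion across all users makes for slow experiments.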

To help the team gain confidence, we launched the new onboarding questions as an A/B test to understand whether adding the new questions would lead to any drop-off. We used onboarding conversion as our measure because it provided a high volume of data and had a high baseline conversion, which led to a quick experiment. In less than a week, we could say with some degree of certainty that the new questions had no impact on onboarding conversion.
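One common way to express "no impact, with some degree of certainty" is a confidence interval on the difference between the two arms. The sketch below uses a simple normal-approximation (Wald) interval with made-up counts; it is an assumption about how such a check could be done, not a record of the analysis we ran.

```python
from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Wald confidence interval for (rate_b - rate_a) between two arms."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    std_err = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * std_err, diff + z * std_err


# Hypothetical counts: control (old questions) vs. variant (new questions).
lower, upper = diff_confidence_interval(conv_a=1400, n_a=2000, conv_b=1390, n_b=2000)
print(f"95% CI for change in onboarding conversion: [{lower:+.3f}, {upper:+.3f}]")

# If the interval contains zero and is narrow enough for the business
# to tolerate either end, "no meaningful impact" is a reasonable reading.
no_detectable_impact = lower < 0 < upper
```

The width of the interval matters as much as whether it contains zero: a high-volume, high-baseline metric like onboarding conversion narrows it quickly.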